Deaf people primarily communicate through sign language, so understanding spoken languages can prove challenging. To bridge that gap in communication, the HoloHear team built a mixed reality app at a Microsoft HoloLens Hackathon in San Francisco that translates the spoken word into sign language.

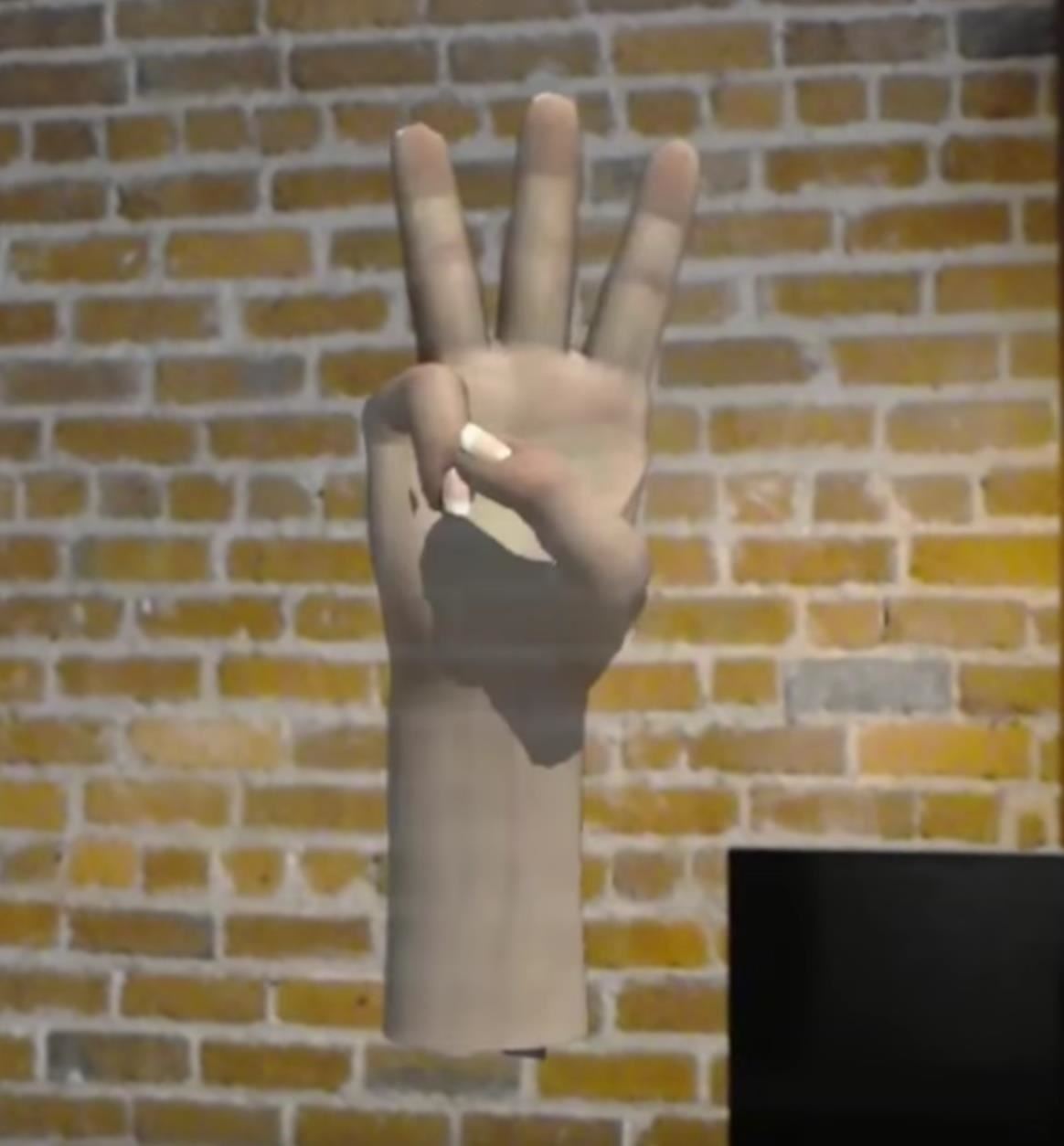

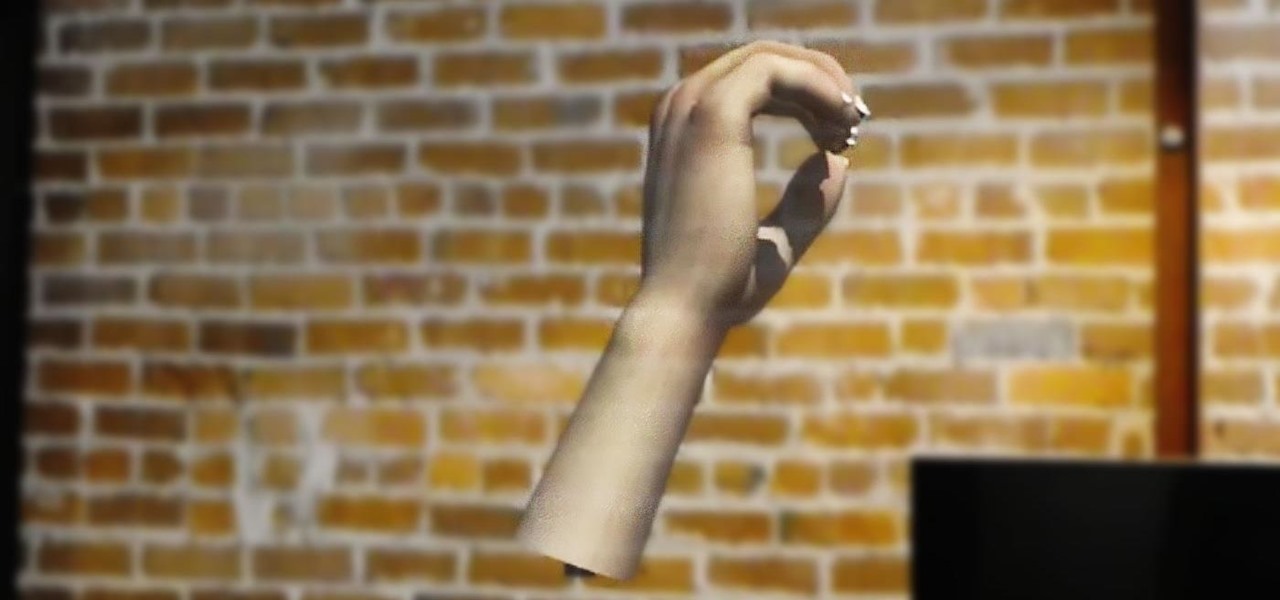

As you'll see in the video above, HoloHear not only translates the spoken word for the hearing impaired, but can also teach a hearing person sign language. When someone speaks aloud, a 3D holographic model appears to show the sentence using American Sign Language (ASL) in close to real time. In listen mode, deaf people can understand speech. In learning mode, the hearing can see what signs to make based on the words they use.

While the app is still rough around the edges, bear in mind that the team created it in 48 hours. It's already functional, and with a little polish it could be very helpful in future communication between the hearing and the hearing impaired.

It also has limited usefulness on the HoloLens, as the device is expensive, only available to developers, and cumbersome to wear when you just want to have a conversation. This sort of augmented reality app seems like it'd feel more at home on a smartphone. At least it would reach more people that way.

Regardless, HoloHear demonstrates how augmented reality can help us communicate better with one another. Headsets can seem like tools of isolation, but when we develop software that aids human interaction, we prove how important this technology can really be.

2 Comments

Very cool. Learning mode in particular is a stroke of genius. For listen mode, I'd think that simply displaying the transcribed text would be more practical. Still, a brilliant idea for a mixed reality app, and incredibly impressive that they managed to build a working prototype in 48 hours.

Although it is great to see technology trying to assist in translation and learning to bridge the gap between spoken languages and signed languages, this is a gross oversimplification, especially the '48 hour' remark, that has led many sign language communities and cultures to feel underwhelmed and misunderstood by technologists.

I truly hope this project has continued, and has given the credit where credit is due in understanding the needs and complexities of the sign language communities abroad.