When Microsoft unveiled Mesh a couple of weeks ago, the move revealed a major part of Microsoft's next steps toward dominating the augmented reality space, particularly with regard to enterprise customers.

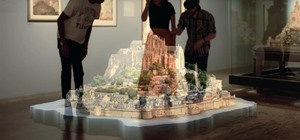

But aside from the general details contained in the announcement, what really got some insiders excited was the demo video (see below) accompanying the launch of Mesh. It looked like something out of Iron Man's holographic lab, but backed by very real sensor and display technology.

So was the video accurate? If so, how could some of the things in the video be accomplished? And if Microsoft is really focused on the enterprise space, what was the meaning behind including consumer-focused examples, including that amazing Pokemon Go proof of concept? In order to unpack what Mesh really means for the HoloLens 2 and the AR space in general, we brought Microsoft's Greg Sullivan onto the virtual stage of Twitter Spaces to answer all of our questions about Mesh.

The following is an abbreviated version of the conversation, but if you want to hear the full chat, you can listen to the video embedded at the bottom of this page.

Adario Strange: First of all, referencing the beautiful Mesh demo video, it's incredible. I think a lot of people got excited by it because it not only looks like science fiction, but we now have the technology to do these things via the HoloLens and Kinect. But one person raised the question: Hey, wait, what they're talking about right now is possible via simplistic avatars, but the more realistic, volumetric, holographic-looking imagery that was represented in the video requires sensors. We all kind of sussed that out on our own, and we think we know that that is the case regarding Mesh. But I wanted to just let you go on the record and describe what exactly we're seeing in the video in terms of what's possible with Mesh.

Greg Sullivan: The short answer is: yes, that is correct. The way we describe it is that one of the core capabilities of Microsoft Mesh is a sense of presence, giving you this true ability to feel like you are in a physical location that is hundreds or thousands of miles away from you. One of the ways that we represent your presence remotely falls somewhere on a spectrum that you've described. At one end of the spectrum, the more simple end of the spectrum, is an avatar. We started with the AltspaceVR avatar system, and the team has brought that over onto Azure, so now it'll inherit all of the enterprise-grade management and security and other things that folks expect from Azure.

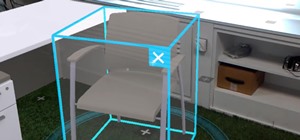

But that's kind of the simplest form, the simplest end of the spectrum. If you are wearing a HoloLens, [it has] the positional tracking and environmental sensing cameras, and so the sensors in the HoloLens are able to follow you as you move. Interestingly, in Mesh, one of the cool things about HoloLens 2, of course, is that it does fully articulated hand tracking. As you've seen, HoloLens 2 looks at 25 distinct points of articulation on each hand. And so when you're wearing a HoloLens and you're using Mesh, you will be represented as an avatar, but that avatar will have the same full degree of hand motion that you do in HoloLens 2. So that's kind of the simplest end of the spectrum.

And the key phrase that you used was "you need more sensors" in order to have that fully volumetric capture, or to move along that continuum from an avatar to a kind of full holoportation. You need more sensors. The sensors in the HoloLens don't capture your body volumetrically, they capture your six degrees of freedom motion through space and they capture all of your hand motions. But we don't capture your full body.

Now, there's a couple of options…if you look closely at the video you see a reference to the Azure Kinect developer kit and camera. The Azure Kinect depth-sensing camera is the same depth-sensing sensor in HoloLens 2. I think [in the video] they're playing chess or they're working on a table, and you see he's volumetrically captured, but you see only the front of him. That's what would happen if you have one of these Kinect cameras. Of course, you could use other depth-sensing technologies, you could use LIDAR, you could use something else. This particular camera is a time-of-flight infrared camera that we use in Kinect. But that's a step up from just using the sensors built into the HoloLens to track you and to just represent you as an avatar.

Next Reality: So let's say I'm on one end using the HoloLens 2, and the person on the other end is represented by an [AltspaceVR style] avatar, what would they be using? What are the different tools they would use to connect, assuming the other person doesn't have a HoloLens 2?

Sullivan: Before I go into the answer fully, I want to make a distinction between Mesh the platform, and the service: the cloud services that deliver these capabilities that developers can build into their own applications. It's an immersive collaboration cloud service. In the same way that any game developer writing a game for Xbox [for example] can automatically make it a multiplayer networked game by plugging into Xbox Live, Mesh makes any application an immersive, collaborative mixed reality application by plugging into the Mesh SDK and adding that capability to your app. So that app could run on HoloLens, it could run on Oculus…you could be in a Windows Mixed Reality headset, you could be on a PC or a Mac, or a smartphone. So the execution environment where you run a Mesh-enabled application, that's cross-platform.

The vision is, regardless of what device you're on, you should be able to participate to some degree in Mesh. And you should be able to run an application that is Mesh-enabled so that you can collaborate in three-dimensional virtual spaces with your friends and colleagues. Now, there's a difference, depending on the hardware that you're using. If you and I are both wearing HoloLens 2, we could go in and run a Mesh application and we would be represented by avatars, and we could walk around each other and collaborate.

But if someone joined that meeting, and they're sitting at their laptop, they are on a two-dimensional screen. And they don't have positional tracking built into their device. So if they walk around their room, they don't move, they would have to [use] cursor navigation, but we could invite that person to our meeting. We don't have this built into the app yet, but ultimately, that person would be able to join via a Mesh-enabled version of Microsoft Teams. We did commit to that—we didn't commit to a timeframe—but we will have a Mesh-enabled version of Teams.

So the idea here is twofold. The first is that if we built this thing and said that you can only use it if you have a HoloLens, that pretty much limits the number of people who could actually use the thing. There are a lot more people with a smartphone in their pocket than with a HoloLens. And so we looked at all of the endpoints, and all of the ways that people today are starting to experience mixed reality, whether it's Pokemon GO or other things. These smart devices have AR frameworks, iOS and Android, ARKit and ARCore, and we can add capability so that developers can build an app for that platform, whether it's an iPhone, or Mac or a PC, and they can add Mesh capability to their app.

Next Reality: I think part of some people's initial confusion with the Mesh demo video and the name was, often, when we're thinking about the world of 3D and the metaverse, when we hear the word "mesh," in general, we think of this kind of virtual layer over everything. Whereas, in this case, "mesh" actually means bringing [various immersive platforms] together. "Mesh" doesn't necessarily mean the same thing as what we would normally come to think of as a "mesh," in the 3D sense. Do I have that right?

Sullivan: Yes, we realized that there were existing interpretations of the word mesh, but I think it kind of fit well because what we're talking about is a substrate that enables connection between people and the ability to feel present.

Next Reality: Here's a touchy question: A lot of this feels like Spatial. I know you guys have had a very friendly, close relationship with Spatial over the last couple of years. So I'm just wondering, does this impact in any way what you guys are doing with Spatial? Is there still a relationship with Spatial? Do you feel like there's any kind of overlap or potential road bumps between you guys now?

Sullivan: That's a great question. I'm not personally involved in that relationship. I know [HoloLens inventor] Alex Kipman is personally involved, and he will tell you that he's working very closely with the folks at Spatial. Here's how he described it to me: the Spatial team is a startup. It's a pretty small outfit.

The longer answer to your question about Spatial is that we're solving a problem generally, so that any developer can build collaborative, shared immersive experiences into their application. And this is going to mean that Spatial (and this is what Alex has talked to them about) can work with us on that plumbing maintenance of the collaborative substrate piece, and then they can focus some of their investments and their resources on things to differentiate their service and their application.

Next Reality: You know what I'm thinking is, this is less that Mesh is necessarily competing directly with Spatial, it's more that Mesh is now allowing a lot of other developers and startups to better compete with Spatial.

Sullivan: Yeah, and they've got a head start. They've got a very clear idea of the value proposition that they're offering. And in some ways, this will enable them to even increase the focus on their offering. This is an idea that has happened before in our industry, right? There's a really hard thing to do that offers a lot of benefits. And so somebody solves that problem once, and then people look at this and say, "Boy, how many times do we want to solve this problem?" Do we want to keep solving it for every application? Or is there a generalized approach that someone can provide that presents us with a foundation upon which we can all build our own kind of interpretation and go off to meet the needs of our specific audience?

Next Reality: That example reminds me of the web browser days, when everyone was trying to figure out what layer to use to access the internet in a more user-friendly way. So you're kind of saying this is almost like a web browser for reality, meaning Mesh, allowing people to create various web browsers for reality.

Sullivan: It's almost more like some of the underlying standards…here's how some of these foundational plumbing issues can be solved. And then you can build on top of that.

Next Reality: What are the cost tiers for accessing Mesh? Is there a developer and professional and enterprise level? How is this actually going to be rolled out in terms of accessibility?

Sullivan: We haven't announced, we haven't given a lot of detail. We will make Mesh freely available in the SDK for developers to build this capability into any application, in the same way that any game developer can build for Xbox Live at no cost to them. I think you can think of Mesh in the same way for developers, it will be free, they can build it into any app.

Next Reality: I'm guessing that, with the cloud component, there will be some fee structure?

Sullivan: Yes, I think that's a safe assumption. We haven't announced any of the details. Part of what we're trying to do with the preview is get feedback from developers. When we did Kinect for Windows, we saw an explosion of creativity from developers about how Kinect would be used. We saw surgeons in operating rooms, conductors conducting orchestras, there were all these really interesting ways of using the Kinect depth-sensing camera that had nothing to do with Xbox gaming. And that was a direct result of the creativity of developers getting their hands on it. Now that we're in preview [with Mesh], turning this over to developers is going to teach us so much about how this can be used. And so we expect a whole bunch of innovation to happen.

Next Reality: For those who aren't familiar with the various software offerings from Microsoft, give me a basic idea of how Mesh sits alongside or differentiates itself from Azure. The two services are both cloud-oriented, so what differentiates the services?

Sullivan: The way I would say it is that Microsoft Mesh is an Azure service. It is a collaborative, immersive platform that uses Azure to deliver those capabilities. It'll utilize the Azure remote rendering that we introduced a couple of years ago. It uses Azure to manage your identity, whether that's through Azure Active Directory, or through your Microsoft account.

Next Reality: Given what we just experienced in this past year, with the pandemic, where remote work has become the norm, a lot of people are video conferencing. And a lot of people have begun to look at AR and VR as alternatives. Not everyone has waded into those immersive waters, we're still mostly keeping it extremely simple, via video. As someone who's been so close to the augmented reality side of what's happening at Microsoft, with the HoloLens, etc., what have you learned over this past year? Are there any anecdotal situations or learnings that you discovered over the past year with regard to how we can and will use AR moving forward?

Sullivan: For a lot of these use cases, we're able to continue doing our jobs. For those of us who are information workers, in particular, we're still doing our jobs. And the ability to do that remotely is entirely because of technology. One of the things that we're also realizing is that it shows the importance of that sense of presence. Everybody's got Zoom or Teams burnout a little bit, and those tools have been amazing because they have enabled us to continue to do our jobs. But it has also highlighted some of the things that we don't get from those experiences. We don't get a sense of a kind of spontaneous collaboration. We don't get the hallway conversations, we don't get the true sense of presence. We don't get the looking, you know, looking me in the eye. There are some things that we really miss, there are some times when you really do need to be in-person and want to be in-person.

But I think one of the things that we've learned over the last year is that some of the meetings could have been emails, and some of the business trips could have been Teams calls. So I think we're seeing how technology can improve the way that we work. For me, personally, I'm not commuting anymore. And boy, that's been a life changer. So I think this is a case where technology can really help us solve some of the problems that we're faced with. And I think that the last year, the pandemic has been an accelerant of the acceptance of that notion. I don't think it has fundamentally changed anything really other than perhaps kind of speeding some stuff up that was likely to happen anyway.
