A group of researchers from Stanford University and Princeton University has put together the largest RGB-D video dataset to date with over 1,500 scans of over 700 different locations across the world, for a total of 2.5 million views.

This dataset, called ScanNet, has been semantically annotated for use in research projects. The purpose of this type of data, of course, is to teach our future robot overlords to better see and understand what they capture. In the process, our computer-based spatial understanding will increase significantly. I can't wait. Bring it on.

Pattern Recognition

A computer processor can work through complex math problems orders of magnitude faster than the human brain, but it is a completely linear process, handling one problem at a time. The brain, on the other hand, handles many problems simultaneously, mathematical and otherwise: it takes in input from many sources (the senses), comprehends that input, and then produces a response.

Researchers have tried to recreate our brain with computers, and one team needed 82,944 processors and 40 minutes to produce the equivalent of 1 second of brain activity. Complex pattern recognition, using multiple senses at once, is one of the major differences between our brains and a computer processor.

Pattern recognition is a phrase that some of my friends make fun of me for using way too much. I remember when I read the book of the same name by William Gibson, and the epiphany I had upon understanding the ideas in the book (just like every William Gibson book, for that matter). Not only is it a phrase I say a lot, it is one of the most fundamental underlying cognitive skills we own — a skill that, unless you work in certain medical or technical fields, you likely do not know much about.

As a simple example, I put out a question on Facebook: What comes to mind when you see the phrase "Sit, Ubu, sit! Good dog"?

If you watched American sitcoms in the '80s or '90s, this was a closing production logo for Ubu Productions, a company that worked on shows like Family Ties and Spin City. While many of the people who responded did not remember the shows associated with the phrase, they could remember the bark at the end of the clip or the picture of the dog with the frisbee in its mouth. This is a good example of recall as a result of pattern recognition.

The Process for ScanNet

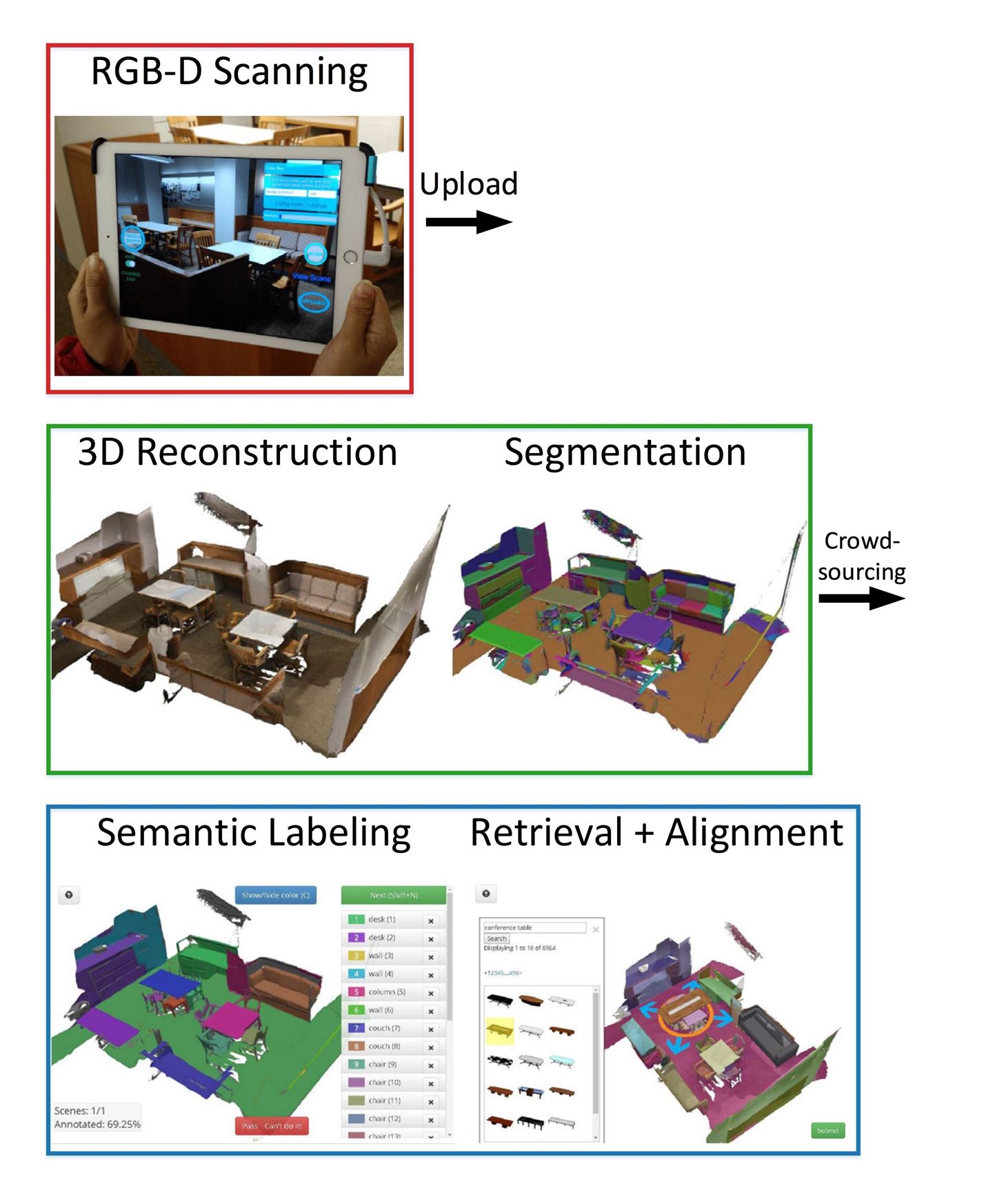

The research team developed a complete start-to-finish pipeline. The entire system is very complex and too technical for this post, but here is an over-simplified breakdown of what they have released in their whitepaper. Starting with twenty iPad Air 2 tablets, equipped with the same Structure sensor as the Occipital Bridge, untrained users simply pointed and took video of an area. The files were then stored on the iPad until they could be uploaded.

In the researchers' words: "Our sensor units used 128 GB iPad Air2 devices which allowed for several hours of recorded RGB-D video. In practice, the bottleneck was battery life rather than storage space."

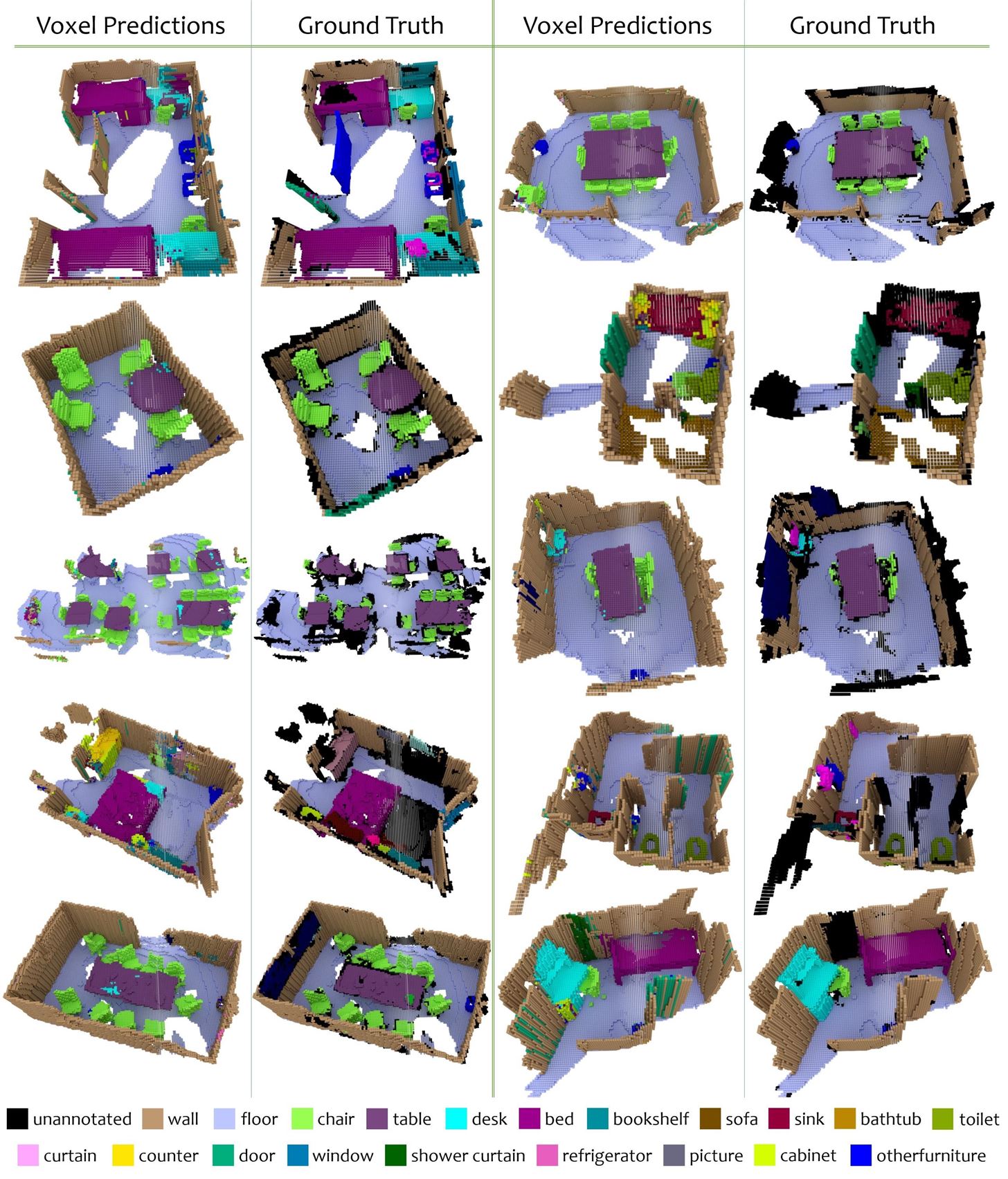

Once users uploaded the scans to the processing server, the scans went through 3D reconstruction and then a complex combination of volumetric fusion, voxel hashing, and various filtering steps, before being broken down into segments in the segmentation process.
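For the curious, here is a toy sketch of the idea behind volumetric fusion with a hashed voxel grid. It is not the ScanNet team's code: the names (`Voxel`, `voxel_key`, `integrate`) and the constants are made up for illustration, and a plain Python dictionary stands in for the GPU hash table a real voxel hashing system would use. Each depth observation is folded into a voxel as a weighted running average of a truncated signed distance, and only voxels that have actually been observed take up memory.

```python
import math
from dataclasses import dataclass

VOXEL_SIZE = 0.05   # metres per voxel edge (assumed value for illustration)
TRUNCATION = 0.15   # signed distances are clamped to +/- this many metres (assumed)


@dataclass
class Voxel:
    tsdf: float = 0.0    # truncated signed distance, normalised to [-1, 1]
    weight: float = 0.0  # how much evidence has been fused into this voxel


def voxel_key(x, y, z):
    """Map a point in metres to integer voxel coordinates (the hash key)."""
    return (
        math.floor(x / VOXEL_SIZE),
        math.floor(y / VOXEL_SIZE),
        math.floor(z / VOXEL_SIZE),
    )


def integrate(grid, key, signed_distance, weight=1.0):
    """Fuse one distance observation into the voxel at `key`.

    `grid` is a dict, so voxels are allocated only when first observed.
    """
    d = max(-1.0, min(1.0, signed_distance / TRUNCATION))
    voxel = grid.setdefault(key, Voxel())
    voxel.tsdf = (voxel.tsdf * voxel.weight + d * weight) / (voxel.weight + weight)
    voxel.weight += weight
    return voxel


# Two frames observe the same voxel; their measurements are averaged.
grid = {}
key = voxel_key(0.12, 0.0, -0.01)
integrate(grid, key, 0.15)  # first frame: surface is 15 cm away
integrate(grid, key, 0.0)   # second frame: surface passes through the voxel
```

Averaging many noisy frames this way is what smooths sensor noise out of the final reconstruction, and keying the grid by voxel coordinates keeps memory proportional to the scanned surface rather than to the whole room volume.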

At this point, the semantic annotation and labeling process was crowdsourced, using a web-based interface, through Amazon Mechanical Turk, a marketplace for human intelligence tasks (HITs). Once the labeling was complete, the 500 crowd workers were given an annotated location, where they matched 3D models from a database and aligned them with the objects in the scene.

What Does This Mean for Mixed Reality Users?

With Google's image recognition and Facebook's facial recognition (which is getting scary good), computers recognizing patterns in 2D images is rather commonplace. Thanks to RGB-D cameras like those used in the Kinect, the infrared depth sensors in the HoloLens, and datasets like ScanNet, computers learning to recognize visual patterns in 3D space will be a reality in the very near future.

This future will have deeply defined and detailed spatial maps and context-rich IoT information to help easily manage your day-to-day needs in personal and shared mixed reality spaces. The system will know when you are in a kitchen and can make suggestions that may help you there, be it making a grocery list based on missing but commonly used items, or instructions for cooking the frozen lasagna in your freezer.

If ScanNet seems like the type of dataset that would help you conquer a problem you are trying to solve, you can find more information on it at their GitHub repository. Although it is released under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, you will have to fill out a terms-of-use agreement and email it to one of the team members to get access.
